In this section I will only discuss human-like agents. The projects and theories I describe are only a small fraction of what is available, and they have been selected with my project in mind.

In agent theory the term believable refers to how agents appear to a human spectator or user. As I will discuss in this part of the text, agents may be considered believable due to many factors: appearance, manoeuvring, behaviour, intelligence etc. Some scientists assume that believability depends directly on how closely the agent looks and behaves like a human (for instance [38] and ). For them the primary function of an agent model is to model life so as to understand it better. Simulations based on such models are most often used to investigate human behaviour in certain environments or to gain insight into how the human mind works. In short, the more precise a model is, the more believable it is.

Other scientists, however, see believability as something other than the precision of the simulation. In their more nuanced view, the purpose of believability is to give the right input to the human observer or the right feedback to the user. That is, believability is about the human-computer relationship. Such applications of agents are mostly found in computer animation for movies, computer games, toys, desktop help agents and the like.

At this point I must decide whether to focus on modelling humans as precisely as possible in order to understand them better, or to aim at making the agents appear believable. As the reader may have gathered from the above, the purpose of this project is to produce agents that appear believable but do not necessarily work internally as humans do.

One of the scientists who see believability in terms of the human-computer relationship is the computer scientist Kerstin Dautenhahn [29]. She investigates how robots should be designed so that they are acceptable to humans and suited to their application domain. Her aim is to build a robot that appears believable.

How precise a human model we make is one thing; the degree of believability we aim for is another. Dautenhahn discusses this issue, presents three primary reasons why believability should be modelled as precisely as possible, and comments on each of them.

Firstly, for scientific investigation it is important to have as believable a model as possible. Here the degree of believability and the degree of precision are linked: in the scientific study of the human mind, believability should of course be as precise as possible.

Secondly, since agents are supposed to act as humans, interact with real humans, or adopt roles and fulfil tasks normally done by humans, human-like agents should possess as many human qualities as possible. Dautenhahn does not agree with this idea. How things look and behave has a clear correspondence with the functions we believe they have. For instance, when a new device is put on the market it normally has some clear design difference from previous models, to give an impression of its new functions. This has escalated in some areas: a Japanese stereo, for example, may today have more buttons and displays than a nuclear power plant. Applied to agent-human interaction, a very believable agent would likewise raise expectations of its capabilities. If people are presented with a robot that looks and behaves like a human, they will expect it to function as well as a human and will thus focus on the ways in which the robot falls short of what its appearance promises.

Thirdly, humans prefer to interact with other humans. Since we know how humans behave, human-like agents would be the ideal partners. Dautenhahn points out that this has turned out not to be entirely true: humans often prefer reliability or predictability to human-like behaviour. It could be fun to use a text editor that works as a secretary or perhaps a critic, but most authors would prefer to be left alone with their muse.

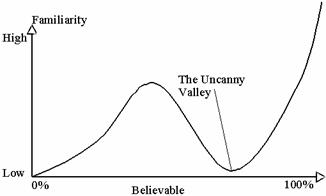

Dautenhahn suggests that believability should not be too precise. An agent that appears more realistic than it actually is raises expectations to a level it cannot live up to. She calls this the zombie-effect, after the philosopher's zombie described previously: a being that acts and looks like a human but in fact has no mind. The zombie-effect is the shock one experiences when one realises that the agent is actually far more primitive than first assumed. Figure 12 is a graph that illustrates this:

Figure 12 Dautenhahn's graph, explanation in the text below.

The horizontal axis of the graph corresponds to how believable, or similar to a human, the agent was made. It is given as a percentage, so 0 percent is a completely non-realistic appearance and 100 percent represents an agent that is completely human. The vertical axis shows familiarity, which indicates how well the agent-human relationship works. A low value means that the human does not understand or feel comfortable with the agent; a high value means that he does. The familiarity axis only shows the tendency of the agent-human relationship and therefore has no values; the exact curve may also deviate somewhat from the original scientific study.

The tendency in the graph is clear: either produce a complete replica of a human or aim at the local maximum. The "valley" in the graph is referred to as the uncanny valley, because agents in this area seem uncanny to human users on closer inspection.

Applying this knowledge, Dautenhahn made a series of robots with different kinds of appearance. The robots were intended as playmates to bring autistic children together. The best result was achieved with a simple wheeled robot without any fur, eyes or other appealing features.

The result Dautenhahn presents is nothing new to cartoonists. If a cartoon is too realistic, the reader will instinctively relate it to his own world and be unsettled by the mismatches that inevitably appear. Any good cartoon, or fiction for that matter, builds up its own universe and makes clear to the reader what genre it belongs to. One would never hear a critic say that Donald Duck is an unrealistic character.

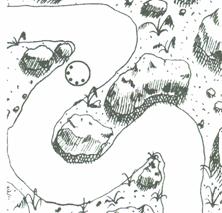

Katrin Hille [28] rediscovers some of these cartoonists' rules through her own empirical investigation. More precisely, she looks at how agent-human relationships may be established through simple visual effects: how the tempo, focus, precision etc. of behaviour reveal emotions. Her agent is very simple and is visualised as in Figure 13 below.

Figure 13 Hille's agent.

The large outer circle is the body of the agent, and the small inner circles can move within it, so they may rearrange into new patterns or even disappear and reappear. The agent's expressions are determined by four parameters: activation, externality, precision and focus. Rearranging the small circles is one way for the agent to express its state. The other way is to adjust its speed and how closely it follows a small predetermined route, shown in Figure 14 below. For example, a frightened agent will move quickly and keep its small circles close together in the interior of its body. A sad agent, in contrast, will move slowly.

Figure 14 Hille's agent on route.

The agent's states are six different emotions: anger, anxiety, contentment, excitement, fear and sadness. Each has a response pattern expressed through the agent's expressive parameters. For instance, anger gives the agent low precision but otherwise high values. As a result, the agent moves quickly but not in a straight line, and internally its small circles pulsate quickly from the centre and forwards, as in Figure 13b.
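To make the mapping from parameters to visible behaviour more concrete, here is a minimal sketch of how an agent like Hille's could be driven. It assumes the four parameters are numbers in [0, 1]; the names `Expression`, `PROFILES`, `step`, `inner_circle_radius` and `MAX_SPEED` are hypothetical, and apart from the anger pattern taken from the text (low precision, otherwise high), the profile values are made up for illustration rather than taken from Hille's work.

```python
import math
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Expression:
    """Hille's four expressive parameters, here assumed to lie in [0, 1]."""
    activation: float   # tempo: speed of movement and of inner pulsation
    externality: float  # how far the inner circles spread from the centre
    precision: float    # how closely the agent keeps to its route
    focus: float        # concentration of the inner circles (unused below)


# Hypothetical profiles for three of the six emotions. Only the anger pattern
# (low precision, otherwise high) follows the text; the numbers are made up.
PROFILES = {
    "anger": Expression(activation=0.9, externality=0.9, precision=0.2, focus=0.9),
    "fear": Expression(activation=0.9, externality=0.1, precision=0.6, focus=0.8),
    "sadness": Expression(activation=0.1, externality=0.3, precision=0.7, focus=0.3),
}

MAX_SPEED = 2.0  # hypothetical distance units per step


def step(pos, route_heading, expr, rng):
    """Move one step along the route: speed scales with activation, and the
    actual heading deviates randomly from the route more as precision drops."""
    speed = MAX_SPEED * expr.activation
    max_deviation = (1.0 - expr.precision) * math.pi / 4
    heading = route_heading + rng.uniform(-max_deviation, max_deviation)
    x, y = pos
    return (x + speed * math.cos(heading), y + speed * math.sin(heading))


def inner_circle_radius(expr, body_radius=1.0):
    """Distance of the small inner circles from the body's centre:
    low externality keeps them close together in the interior."""
    return body_radius * (0.2 + 0.7 * expr.externality)


if __name__ == "__main__":
    rng = random.Random(1)
    for name, expr in PROFILES.items():
        pos = (0.0, 0.0)
        for _ in range(10):
            pos = step(pos, 0.0, expr, rng)
        print(name, [round(c, 2) for c in pos], round(inner_circle_radius(expr), 2))
```

An angry agent covers ground quickly but zigzags, a sad one barely moves, and a frightened one keeps its inner circles tight; the point of the sketch is only that a handful of numeric parameters is enough to produce visibly different behaviour.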

This was only a brief overview of Hille's work, but the point is that she shows empirically that people are able to read emotions from these simple expressions. She does this by having a group of students watch the agent and then say which of the six emotions is displayed, and they mostly guess the right emotion. This is interesting because it shows that many things other than facial expressions and direct actions can be used to give an impression of emotions and internal states. Hille concludes that emotions can be recognised without information about their context. This means that the observer does not have to know the individual or anything about its circumstances in order to read some of its emotions. This simple agent bears no resemblance to humans at all. It only has some basic behaviour patterns such as "sad equals slow", which are apparently enough for human spectators to associate the emotion with it.

From the fact that emotions may be communicated through simple motor functions, Hille concludes that physiology is an important part of emotions. Therefore behaviours should display emotions in order to communicate them properly. To design a believable agent, one would then have to include emotional states in the behavioural system, just as we would expect for physical states (an injured person would limp, for instance).
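The design consequence can be sketched in a few lines: a behaviour such as walking takes both the physical and the emotional state as inputs, so the same action is visibly performed differently. The classes, the multipliers and the assumed walking pace below are hypothetical illustrations, not a model proposed by Hille.

```python
from dataclasses import dataclass


@dataclass
class PhysicalState:
    leg_injury: float = 0.0  # hypothetical scale: 0 = healthy, 1 = badly injured


@dataclass
class EmotionalState:
    sadness: float = 0.0  # hypothetical intensities in [0, 1]
    fear: float = 0.0


BASE_WALK_SPEED = 1.4  # assumed normal walking pace in metres per second


def walk_speed(body: PhysicalState, mood: EmotionalState) -> float:
    """Speed chosen by a 'walk' behaviour. Both the physical and the
    emotional state are inputs, so the same action is visibly performed
    differently depending on how the agent feels."""
    physical_factor = 1.0 - 0.6 * body.leg_injury  # injured: limps, slower
    emotional_factor = 1.0 - 0.5 * mood.sadness + 0.8 * mood.fear  # sad: slow, afraid: fast
    return BASE_WALK_SPEED * physical_factor * max(emotional_factor, 0.1)
```

Treating emotion as one more input to every motor behaviour, exactly like an injury, is what lets "sad equals slow" emerge without a separate animation for each emotion.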

Vosinakis and Panayiotopoulos present a complete solution to making believable agents called SimHuman [47]. This includes both a mind and a body model, and the 3D environment the agent lives in. Such an approach is much like what I am doing in this project.

They also distinguish between scientific simulation systems and systems that are "just" believable, and place their own work in the latter category. To produce believable agents, their idea is that an agent "should not only look like, but also behave as a living organism" [47]. This includes agent-environment interaction on a more practical level than described previously: to embed the agent in a 3D model of the world, the agent-environment interface has to be specified more precisely than just as input/output. In the models described earlier (see chapter ), there was a clear idea of what an event was. It could be encountering a food source or being faced with a monster: discrete events with a clear subject. When the environment is modelled in greater detail, the visualisation of the body and the computation of physics become an important part of the model. Compared with the philosophical ideas behind the AI models presented earlier, the new challenges are practical ones such as collision detection, equipment and locations. To transform a theoretical model, for instance the Will-architecture, into an agent embedded in a 3D environment, many new features would have to be added that do not necessarily have anything to do with the mind model. How does the agent get from one location to another, how does it perceive the world, and how do the physics work? These are some of the general questions. Minor problems also have to be examined, for example how the limbs are joined together, how the limbs correspond to the different interaction tasks, and how the agent turns in the right direction.

Vosinakis and Panayiotopoulos believe that the problem discussed by Dautenhahn (4.1), how believable agents should be, has a "true solution": models should have neither too many physical details nor too much emphasis on animating personality. They claim that systems do not have to be completely believable (and are therefore on Dautenhahn's side), but for them it comes down to a performance issue rather than the "uncanny valley". Their aim is to have acceptably believable agents that work in real time. Real time means that the internal time of the simulation and the wall-clock time outside the computer are in sync. Run-time performance is a new and technical question that turns out to be a major design issue when the ideas are taken from the drawing board into the computer. Even with the world's largest computer, if the agent's mind contains exponential-time algorithms or other time- or space-consuming features, it is simply not possible to make it run in real time.
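The real-time constraint can be stated operationally: every mind update must finish within the time step it simulates. The sketch below is an illustration under assumptions (a fixed step of 1/30 s, and the hypothetical helpers `count_overruns` and `plans_to_evaluate`); it is not how SimHuman measures performance. It counts the frames in which simulated time falls behind wall-clock time, and shows why a brute-force planner that scores every action sequence cannot stay inside such a budget.

```python
import time
from typing import Callable

FRAME_BUDGET = 1.0 / 30  # assumed target: 30 mind updates per simulated second


def count_overruns(update_mind: Callable[[float], None], frames: int,
                   clock: Callable[[], float] = time.perf_counter) -> int:
    """Run update_mind once per frame with a fixed time step and count the
    frames whose computation took longer than the time step itself, i.e. the
    frames in which simulated time falls behind wall-clock time."""
    overruns = 0
    for _ in range(frames):
        start = clock()
        update_mind(FRAME_BUDGET)
        if clock() - start > FRAME_BUDGET:
            overruns += 1
    return overruns


def plans_to_evaluate(n_actions: int, depth: int) -> int:
    """Number of action sequences a brute-force planner must score. It grows
    exponentially with the look-ahead depth, so faster hardware only pushes
    the limit back by a step or two."""
    return n_actions ** depth
```

With 10 possible actions, looking 3 steps ahead means 1,000 plans per frame, but looking 8 steps ahead means 100,000,000; no realistic speed-up keeps the latter within a 33 ms frame.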

The consequence for producing believable agents is that performance must be kept firmly in mind when designing the model. SimHuman solves these problems by the obvious means: collision detection on every object, and perception as the result of ray-casting (see also [49]). I have another solution, described in .
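Perception by ray-casting can be illustrated in 2D: the agent casts a fan of rays across its field of view and perceives, for each ray, only the nearest object it hits, so objects hidden behind others stay unseen. This is a generic minimal sketch assuming circular obstacles; `Obstacle`, `ray_hit`, `perceive` and their parameters (a 120 degree field of view, 9 rays, a 20-unit range) are hypothetical and not SimHuman's implementation.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Obstacle:
    name: str
    x: float
    y: float
    radius: float


def ray_hit(ox, oy, dx, dy, obs):
    """Distance along a ray (origin o, unit direction d) to a circular
    obstacle, or None if the ray misses it."""
    fx, fy = ox - obs.x, oy - obs.y
    b = fx * dx + fy * dy
    c = fx * fx + fy * fy - obs.radius ** 2
    disc = b * b - c
    if disc < 0:
        return None
    root = math.sqrt(disc)
    t = -b - root
    if t < 0:
        t = -b + root  # the origin lies inside the obstacle
    return t if t >= 0 else None


def perceive(x, y, heading, obstacles, fov=math.radians(120),
             n_rays=9, max_range=20.0):
    """Cast n_rays evenly across the field of view and return the names of
    the objects that are the nearest hit of at least one ray, so objects
    hidden behind others are not perceived."""
    seen = set()
    for i in range(n_rays):
        angle = heading - fov / 2 + fov * i / (n_rays - 1)
        dx, dy = math.cos(angle), math.sin(angle)
        hits = []
        for obs in obstacles:
            t = ray_hit(x, y, dx, dy, obs)
            if t is not None and t <= max_range:
                hits.append((t, obs.name))
        if hits:
            seen.add(min(hits)[1])
    return seen
```

The cost is one intersection test per ray per object every frame, which is why the number of rays and the perception range become performance parameters in a real-time system.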

Dautenhahn discussed why things should not be too realistic. If the agent looks more intelligent or socially capable than it actually is, the human observer will get an uncanny sensation when he figures this out. Therefore she suggests that agents are kept at the minimum necessary level of believability, or at least presented as caricatures in a cartoon-like fashion.

Hille concludes that bodily postures, gestures and the manner in which things are done are important cues for reading the state of the agent. Besides the obvious point that a human-like agent that moves like a robot is not very believable, motor functions may be used to communicate emotions even without facial expressions, sounds or other direct means.

Lastly, the SimHuman project teaches us that modelling physics results in new problems. Physics should therefore be part of the model from the beginning.

An important aspect of social agents is how they work as a group and, as such, interact with the environment. Should agents be able to own objects or other agents, and how should agents coordinate group behaviour? The benefits for the individual agent of being able to function as part of a group and submit to norms and morals are clear, as a group may share and pursue a common goal [18]. I now survey a selection of these ideas.

Crowds are a kind of group behaviour. People sometimes form crowds deliberately to achieve a common goal, but mostly crowds simply emerge when people are present in the same environment. The result is a collective behaviour (a kind of swarm intelligence [19]) that both has a life of its own and in most cases helps the individuals achieve their goals. These are also the two perspectives we may take on crowds: as a means for the individual to cooperate with a multitude of other individuals, or as an organism of its own that pursues a single goal.

Daniel Thalmann discusses how crowd behaviour can be simulated in a believable multi-agent system [15]. His approach is to start from an agent architecture that does not support any group behaviour and then extend it based on crowd theory. The agents thus remain autonomous, which corresponds to the idea that crowds arise from the members focusing on each other's activities rather than from a single mind controlling them.

Thalmann suggests that agents in a crowd may choose their behaviour based on one of three things. Firstly, explicitly programmed crowd behaviour, where the crowd has its own life. Secondly, reactive or autonomous events may determine the agent's behaviour; this is the idea that crowd behaviour arises as the result of many interlocked individual behavioural decisions. Thirdly, the crowd may be guided by a single agent, a leader.
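The three sources of crowd behaviour can be sketched as a per-agent switch that decides where the agent heads next. This is an illustration only: `Source`, `CrowdAgent` and `next_target` are hypothetical names, and the autonomous case uses a simple "move towards the centre of nearby agents" rule chosen as an example of reactive behaviour, not a rule taken from Thalmann.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import List, Optional, Tuple

Point = Tuple[float, float]


class Source(Enum):
    SCRIPTED = auto()    # crowd behaviour programmed explicitly for the crowd
    AUTONOMOUS = auto()  # behaviour emerges from individual reactive decisions
    LEADER = auto()      # the crowd is guided by a single leader agent


@dataclass
class CrowdAgent:
    pos: Point
    source: Source
    leader: Optional["CrowdAgent"] = None


def next_target(agent: CrowdAgent, crowd_goal: Point,
                neighbours: List[CrowdAgent]) -> Point:
    """Where the agent heads next, depending on which of the three sources
    currently drives its behaviour."""
    if agent.source is Source.SCRIPTED:
        return crowd_goal          # the crowd as a whole 'decides'
    if agent.source is Source.LEADER and agent.leader is not None:
        return agent.leader.pos    # follow the leader
    # Autonomous (also the fallback for a leaderless follower): a simple
    # reactive rule that moves towards the centre of the nearby agents.
    if not neighbours:
        return agent.pos
    n = len(neighbours)
    return (sum(a.pos[0] for a in neighbours) / n,
            sum(a.pos[1] for a in neighbours) / n)
```

Because the source is a property of each agent rather than of the crowd, the agents stay autonomous in the sense described above, and a crowd can mix all three kinds of members.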

Thalmann distinguishes between social and hierarchical relationships. He claims that hierarchical relations are much more stable than social ones. This is one of the reasons why the correspondence between each kind of relationship and behaviour should be implemented explicitly rather than in a standard form. He writes: "In order to realistically simulate how humans interact in a specific social context, it is necessary to precisely model the type of relationship they have and specify how it affects their interpersonal behaviour" [15]. He suggests some relations that are important for modelling believable agents: dominance, attraction, hierarchical rank and emotional expressiveness. According to Thalmann, each of these features should be modelled separately, together with its impact on behaviour, communication, group behaviour and so on.
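One way to follow Thalmann's advice is to store each relation as its own field and write an explicit rule for how it affects a particular interpersonal behaviour. In the sketch below the field names follow his list, but the scales, the hypothetical functions `gives_way`, `personal_distance` and `shown_emotion`, and all numbers are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Relationship:
    """How agent A relates to agent B, with one field per relation Thalmann
    lists. The scales and the rules below are assumptions for illustration."""
    dominance: float       # in [-1, 1]; > 0 means A dominates B
    attraction: float      # in [-1, 1]; > 0 means A is attracted to B
    rank_difference: int   # A's hierarchical rank minus B's
    expressiveness: float  # in [0, 1]; how openly A shows emotions to B


def gives_way(rel: Relationship) -> bool:
    """Does A give way to B? Hierarchical rank is consulted first, since
    hierarchical relations are taken to be the more stable ones; social
    dominance only decides between equals."""
    if rel.rank_difference != 0:
        return rel.rank_difference < 0
    return rel.dominance < 0


def personal_distance(rel: Relationship, base: float = 1.2) -> float:
    """Distance in metres A keeps from B: shorter when A is attracted to B,
    longer when their ranks differ; never below 0.5 m."""
    return max(0.5, base - 0.5 * rel.attraction + 0.2 * abs(rel.rank_difference))


def shown_emotion(intensity: float, rel: Relationship) -> float:
    """How strongly A displays an emotion of the given intensity towards B."""
    return intensity * rel.expressiveness
```

Keeping the relations separate makes each rule easy to inspect and tune, which is the point of modelling "the type of relationship" explicitly rather than with one generic social score.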

Nishimura and Ikegami [48] take another approach to modelling social behaviour. The individuals in their model do not communicate by interaction but through changes in their relationships. In this case a relationship is, for instance, "one follows the other". Influence on other agents is therefore exerted indirectly. For instance, if one agent stops following another, the other agent might notice this when it is about to do a task that requires a second agent. This might lead the agent to learn under what conditions it gains followers and how to change its own relationships to reach its goals. This approach does not seem suitable for making believable human-like agents, but it is an interesting way to model this kind of emergent social behaviour.
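The indirect nature of this kind of influence can be shown with a minimal sketch in which "who follows whom" is the only shared state and no messages are ever sent. `FollowNetwork` and `try_joint_task` are hypothetical names, and the rule that a joint task needs one follower is an assumption; this does not reproduce Nishimura and Ikegami's model.

```python
from collections import defaultdict


class FollowNetwork:
    """Relationships are the only channel of influence: who follows whom."""

    def __init__(self):
        self.leader_of = {}  # follower -> the agent it follows

    def follow(self, follower, leader):
        self.leader_of[follower] = leader

    def stop_following(self, follower):
        self.leader_of.pop(follower, None)

    def followers(self, agent):
        return {f for f, l in self.leader_of.items() if l == agent}


def try_joint_task(network, agent, outcomes):
    """A task that needs two agents succeeds only if someone follows `agent`.
    No message is sent when a follower leaves; the agent only notices the
    changed relationship through the outcome it records in `outcomes`."""
    success = bool(network.followers(agent))
    outcomes[agent].append(success)
    return success


if __name__ == "__main__":
    net = FollowNetwork()
    outcomes = defaultdict(list)
    net.follow("b", "a")
    try_joint_task(net, "a", outcomes)
    net.stop_following("b")  # "b" leaves silently
    try_joint_task(net, "a", outcomes)
    print(outcomes["a"])     # the record of outcomes is all "a" has to learn from
```

The recorded outcomes are the raw material from which an agent could learn under what conditions it keeps or gains followers.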

A concrete example of social behaviour is presented by Delgado-Mata and Aylett [8]. Their aim is to develop a virtual smell sensor that their agents may use as a means of communication. The agents in their model have no means of connecting directly to each other, as agents do for instance through the connector in the PECS-architecture (see ). Instead they must establish a connection through physical means in the environment, in this case scent and smell, though it might as well have been light and vision or sound waves and ears. Odour molecules are emitted continuously by all agents in the environment and reflect states such as emotions and physical condition. Before they are sensed, the molecules are distributed through the environment according to wind flows, time of day and the like.

For example, if an agent encounters a monster, it will emit a "fear" pheromone. As it is eaten or runs away, the scent particles will drift in some direction and might reach the smell sensors of other agents. The direction these agents are facing could influence how well the scent is picked up. The agents that catch the scent of "fear" react to the input as if they had encountered a monster themselves. They then run away and emit more "fear" particles, and eventually a large group of agents runs away. Agents upwind of the monster will not get the "fear" message, and the monster may then eat them one at a time.
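The chain reaction described above can be reproduced in a very reduced form: a one-dimensional row of cells, a wind that shifts all scent one cell per step, and agents that become afraid once the scent in their cell exceeds a threshold. This is a minimal sketch under those simplifying assumptions; `spread_fear` and its parameters (`emission`, `decay`, `threshold`) are hypothetical and much simpler than Delgado-Mata and Aylett's model, which also accounts for time of day and the agents' orientation.

```python
def spread_fear(agents, witness, n_cells, wind=1, steps=20,
                emission=1.0, decay=0.8, threshold=0.1):
    """1-D sketch of scent-based alarm. `agents` maps names to cell indices;
    `witness` is the agent that met the monster. Each step, afraid agents
    emit fear scent, the wind shifts all scent one cell (wind=+1 blows
    towards higher indices, -1 towards lower) while it decays, and any agent
    whose cell holds at least `threshold` scent becomes afraid too.
    Returns the set of afraid agents."""
    scent = [0.0] * n_cells
    afraid = {witness}
    for _ in range(steps):
        for name in afraid:                  # afraid agents emit fear scent
            scent[agents[name]] += emission
        carried = [0.0] * n_cells            # wind carries scent downwind;
        for i, amount in enumerate(scent):   # scent blown off the grid is lost
            j = i + wind
            if 0 <= j < n_cells:
                carried[j] = amount * decay
        scent = carried
        for name, cell in agents.items():    # react as if meeting the monster
            if scent[cell] >= threshold:
                afraid.add(name)
    return afraid
```

With agents at cells 2, 5, 7 and 9 and the witness at cell 5, an eastward wind (`wind=1`) alarms the agents at 7 and 9 but never the one at 2, which stays upwind and unaware; reversing the wind reverses who is warned.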

This approach does not seem to fit human-like agents, but if it modelled visual or auditory communication, it would seem a very realistic way to model inter-agent communication. However, the response to a direct experience and to one perceived through communication ought to be different. Even though a lone agent is best off running away from a monster, smart agents might gang up and defeat it together.

If you want to model an environment that the agents may interact with, it also seems necessary to model some kind of morals, norms or ownership relations. Otherwise the agents would have no concept of how objects fit into the world beyond their usage. A simple example is for agents to determine when to drop picked-up items after use. Here the designer could explicitly program that equipment is dropped after use, or he could give the agents some sense of morality and ownership and let each agent decide for itself whether to steal the object or drop it.

Flentge et al. have written a paper on norms in agent societies [20]. They define norms as follows: "A norm can be considered as a general behaviour code that is more or less compulsory and whose non-observance is punished by sanctions". Norm theories can be used to model multi-agent systems that mimic difficulties typically found in human societies, such as how agents approach other agents or treat equipment. They claim that norms have further benefits for social agents: they make behaviour more predictable and reduce the complexity of social situations for the observer. If we want to make believable agents, it is important that the observing human can keep track of what is going on.

The norms they discuss concern the possession of goods, which are essential to the survival of the agents in their model. Possession norms emerge as several agents agree on which possessions are claimed by whom. When an agent wishes to possess some goods, it marks them, but it only benefits from them if the other agents accept the claim.

Norms propagate through the society much like Dawkins' memes [40]. Memes are the building blocks of cultural tradition, just as genes are the building blocks of genetic inheritance. The idea is that memes pass from one individual to another as individuals imitate each other's behaviour. In the model Flentge et al. describe, there are two memes: the possession meme and the sanction meme. Agents with an active possession meme respect the other agents' goods and do not use them, whereas agents that do not respect the norm use whatever goods they find. The sanction meme determines behaviour when an agent observes someone violating the norm (using someone else's goods). A sanctioning agent that observes such an act will punish the offender, reducing his chance of survival. The result is that agents that respect the norms do much better under conditions where goods are scarce.
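The individual rules behind the two memes can be sketched as follows. The sketch only encodes the per-agent rules described above (use of goods, sanctioning of observed violations, and meme transmission by imitation); it does not simulate a population or reproduce Flentge et al.'s results. The energy values, the penalty and the imitation probability `p` are assumptions, and all names are hypothetical.

```python
import random
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Agent:
    name: str
    possession_meme: bool  # respects goods marked by others
    sanction_meme: bool    # punishes observed violations of the norm
    energy: float = 10.0


@dataclass
class Good:
    owner: Optional[str] = None  # name of the agent that marked it, if any
    food: float = 3.0


def may_use(agent: Agent, good: Good) -> bool:
    """An agent with the possession meme only uses unmarked goods or its own;
    an agent without it uses whatever it finds."""
    if not agent.possession_meme:
        return True
    return good.owner is None or good.owner == agent.name


def consume(agent: Agent, good: Good, observers: List[Agent],
            penalty: float = 2.0) -> bool:
    """Eat from `good` if the agent's memes allow it. Using someone else's
    good is a violation, and every observing agent with the sanction meme
    punishes the offender by reducing its energy."""
    if not may_use(agent, good):
        return False
    violation = good.owner is not None and good.owner != agent.name
    agent.energy += good.food
    good.food = 0.0
    if violation:
        for observer in observers:
            if observer.sanction_meme and observer is not agent:
                agent.energy -= penalty
    return True


def imitate(learner: Agent, model: Agent, rng: random.Random,
            p: float = 0.1) -> None:
    """Memes spread by imitation: with probability p per meme, the learner
    adopts the model's version of that meme."""
    if rng.random() < p:
        learner.possession_meme = model.possession_meme
    if rng.random() < p:
        learner.sanction_meme = model.sanction_meme
```

A full simulation would repeatedly let agents mark, consume and imitate under a limited supply of goods; whether norm-respecting agents then do better depends on parameters such as the penalty and the scarcity of goods.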

The idea of norms seems a good general solution to the problems of agent-environment interaction raised above. I have done some preliminary work on norms (see appendix A); otherwise my solution to these problems is described in .

One definition of morality is putting other people's interests ahead of one's own [39]. From a Darwinian perspective, selfish people would have a better chance of surviving than people with high morals, and would thus come to populate the planet. However, cooperation has a clear evolutionary benefit as well, and trust and morality are an indubitable part of it. The question is where the line lies between naivety and beneficial trust.

The prisoner's dilemma (see [39] and ) is an example of such a problem. Two people must each decide, independently, whether to trust the other. If neither trusts the other, both get little benefit. If one trusts and the other does not, the one who does not trust receives all the benefit. If both trust each other, however, they both get an average benefit. This is illustrated in Figure 15, where a higher value means more benefit.
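In standard game-theory notation (introduced here, not necessarily the values used in Figure 15), each cell gives the payoffs (A, B): $T$ is the temptation of not trusting someone who trusts, $R$ the reward for mutual trust, $P$ the punishment for mutual distrust and $S$ the payoff of the one who trusted in vain. The ordering below is exactly the verbal description above; the second condition is commonly added for repeated games so that steady mutual trust pays more than taking turns at exploiting each other.

$$
\begin{array}{l|cc}
 & \text{B trusts} & \text{B does not trust} \\ \hline
\text{A trusts} & (R,\ R) & (S,\ T) \\
\text{A does not trust} & (T,\ S) & (P,\ P)
\end{array}
\qquad
T > R > P > S, \qquad 2R > T + S
$$

The values $T = 5$, $R = 3$, $P = 1$, $S = 0$ used in Axelrod's tournaments satisfy both conditions.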

Figure 15 The prisoner's dilemma with trust.

There are many different strategies for this problem, but "tit-for-tat" has been shown to work well: trust the other person initially, and from then on do whatever they did last time. The strategy succeeds because an untrustworthy player can cheat a tit-for-tatter only once; from then on, every attempt to gain at the other's expense is met with distrust in return. A community of "tit-for-tatters" will therefore do better in the long term than an untrusting society.
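The argument can be checked with a short iterated prisoner's dilemma. The sketch assumes Axelrod's payoff values (not necessarily those of Figure 15) and a fixed game length of ten rounds; `tit_for_tat`, `never_trust` and `play` are hypothetical names.

```python
from typing import Callable, List, Tuple

# Payoff to "me" for (my move, their move); True means "trust".
# The values T=5, R=3, P=1, S=0 are the ones used in Axelrod's tournaments.
PAYOFF = {
    (True, True): 3,    # R: mutual trust
    (True, False): 0,   # S: trusted, but was cheated
    (False, True): 5,   # T: cheated someone who trusted
    (False, False): 1,  # P: mutual distrust
}

Strategy = Callable[[List[bool]], bool]


def tit_for_tat(their_moves: List[bool]) -> bool:
    """Trust at first, then repeat the other player's previous move."""
    return their_moves[-1] if their_moves else True


def never_trust(their_moves: List[bool]) -> bool:
    """Never trust, whatever the other player did."""
    return False


def play(a: Strategy, b: Strategy, rounds: int = 10) -> Tuple[int, int]:
    """Iterated prisoner's dilemma; returns the total payoffs of a and b."""
    moves_a: List[bool] = []
    moves_b: List[bool] = []
    score_a = score_b = 0
    for _ in range(rounds):
        move_a, move_b = a(moves_b), b(moves_a)
        score_a += PAYOFF[(move_a, move_b)]
        score_b += PAYOFF[(move_b, move_a)]
        moves_a.append(move_a)
        moves_b.append(move_b)
    return score_a, score_b
```

Over ten rounds two tit-for-tatters score 30 each, while two players who never trust score only 10 each. Against tit-for-tat, a never-trusting player gains the temptation payoff once and then faces distrust for the rest of the game, ending on 14 against 9: it wins that single encounter, but a society of such players is far worse off than a society of tit-for-tatters.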

In conclusion, morality and trust seem to have a reasonable explanation from an evolutionary perspective. If we want to model cooperating agents, this kind of long-term mutual benefit could be used to motivate agents to engage in social interaction.

This was a very short walkthrough of two subjects: believability and social interaction. More than 1000 new papers on these subjects are published every year, so the amount of literature is enormous. The papers and theories described here are all important for my project, but others deserve to be represented as well.
